Why people are worried about Microsoft's AI that makes photos come to life

A new Microsoft generative AI system has highlighted how advanced deepfake technology is becoming: it can generate convincing video from a single image and an audio clip.

The tool takes an image and turns it into a realistic video, complete with convincing emotions and movements such as raised eyebrows.

One demo shows off the Mona Lisa coming to life and singing Lady Gaga’s Paparazzi. Microsoft says the system was not specifically trained to handle singing audio, but it copes with it anyway. The ability to generate video from a single image and audio file has, however, alarmed some experts.

Microsoft has not announced any plans to release the AI system to the general public. Yahoo spoke to two AI and privacy experts about the risks of this sort of technology.

What's significant about this new technology?

The VASA system (which stands for 'visual affective skill') allows users to control where the fake person is looking and what emotions they display on screen. Microsoft says the tech paves the way for ‘real time’ engagement with realistic talking avatars.

Microsoft says, ‘Our premiere model, VASA-1, is capable of not only producing lip movements that are exquisitely synchronised with the audio, but also capturing a large spectrum of facial nuances and natural head motions that contribute to the perception of authenticity and liveliness.’

Why are some people worried?

Not everybody is enamoured with the new system, with one blog describing it as a ‘deepfake nightmare machine’. Microsoft has emphasised the system is a demonstration and says there are currently no plans to release it as a product.

But while VASA-1 represents a step forward in animating people, the technology is not unique: audio start-up Eleven Labs allows users to create incredibly realistic audio doppelgangers of people, based on just 10 minutes of audio.

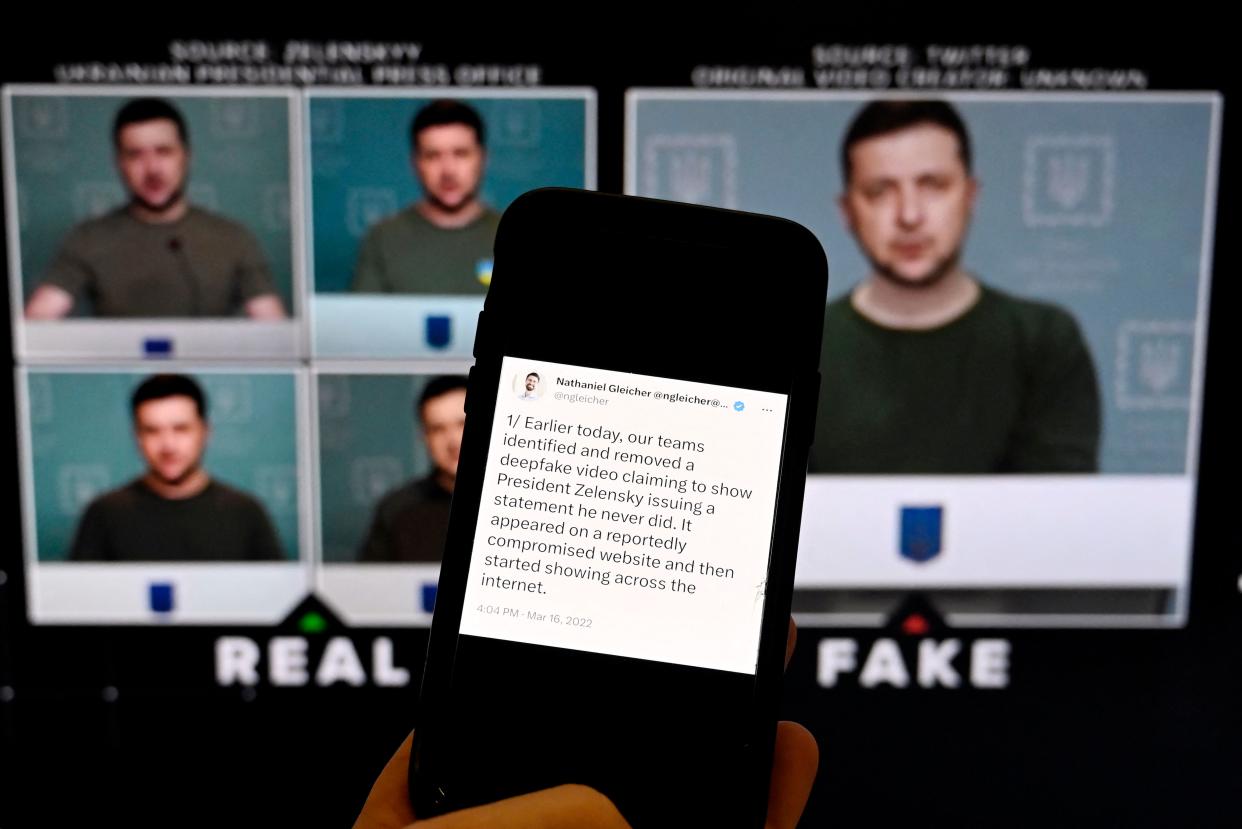

Eleven Labs’ technology was used to create a ‘deepfake’ audio clip of Joe Biden: a fake version of the President's voice was ‘trained’ on publicly available audio clips, and the resulting clip, which urged people not to vote, was then sent out to voters. The incident, which saw a user banned from Eleven Labs, highlighted how easily such technology can be used to manipulate real events.

In another incident, a worker at a multinational firm paid out $25 million to fraudsters after a video call in which every other participant, apparently fellow members of staff, was a deepfake. Deepfakes are becoming increasingly common online, with one survey by Prolific finding that 51% of adults said they had encountered deepfaked video on social media.

Simon Bain, CEO of OmniIndex, says, 'Deepfake technology is on a mission to produce content that contains no clues or ‘identifiable artifacts’ to show that it is fake. The recent VASA-1 demo is the latest such development, offering a significant step towards this, and Microsoft’s accompanying ‘Risk and responsible AI considerations’ statement suggests this drive for perfection, saying:

“Currently, the videos generated by this method still contain identifiable artifacts, and the numerical analysis shows that there's still a gap to achieve the authenticity of real videos.”

'Personally, I find this deeply alarming, as we need these identifiable artifacts to prevent deepfakes from causing irreparable harm.'

What are the telltale signs you are looking at a deepfake?

Tiny signs, such as inconsistencies in skin texture and flickers in facial movements, can give away that you are looking at a deepfake, Bain says. But soon even those may disappear, he explains.

Bain says, 'Only these possible inconsistencies in skin texture and minor flickers in facial movements can visually tell us about a video’s authenticity. That way, we know that when we’re watching politicians destroy their upcoming election chances, it’s actually them and not an AI deepfake.

'This begs the question: why is deepfake technology seemingly determined to eliminate these and other visual clues as opposed to ensuring they remain in? After all, what benefit can a truly lifelike and ‘real’ fake video have other than to trick people? In my opinion, a deepfake that is almost lifelike but identifiably not can have just as much social benefit as one that is impossible to identify as fake.'

What are tech companies doing about it?

Twenty of the world's biggest tech companies, including Meta, Google, Amazon, Microsoft and TikTok, signed a voluntary accord earlier this year to work together to stop the spread of deepfakes around elections.

Nick Clegg, president of global affairs at Meta, said, “With so many major elections taking place this year, it’s vital we do what we can to prevent people being deceived by AI-generated content.

“This work is bigger than any one company and will require a huge effort across industry, government and civil society.”

But the broader effect of deepfakes is that soon no one will be able to trust anything online, which means companies should use other methods to 'validate' videos, says Jamie Boote, associate principal consultant at the Synopsys Software Integrity Group.

Boote said, "The threat posed by deepfakes is that they are a way of fooling people into believing what they see and hear transmitted via digital channels. Previously, it was hard for attackers to fake someone’s voice or likeness, and even harder to do so with live video and audio. Now AI makes that possible in real time and we can no longer believe what’s on screen.

"Deepfakes open up another avenue of attacks against human users of IT systems or other non-digital systems like the stock market. This means that video calls from the CEO or announcements from PR folks can be faked to manipulate stock prices in external attacks or be used by spearphishers to manipulate employees into divulging information, changing network settings or permissions, or downloading and opening files.

"In order to protect against this threat, we have to learn to validate that the face on the screen is actually the face in front of the sender’s camera. That can be done through extra channels, like a phone call to the sender’s cell phone, a message from a trusted account, or, for public announcements, a press release on a public site controlled by the company."