Top Israeli spy chief exposes his true identity in online security lapse

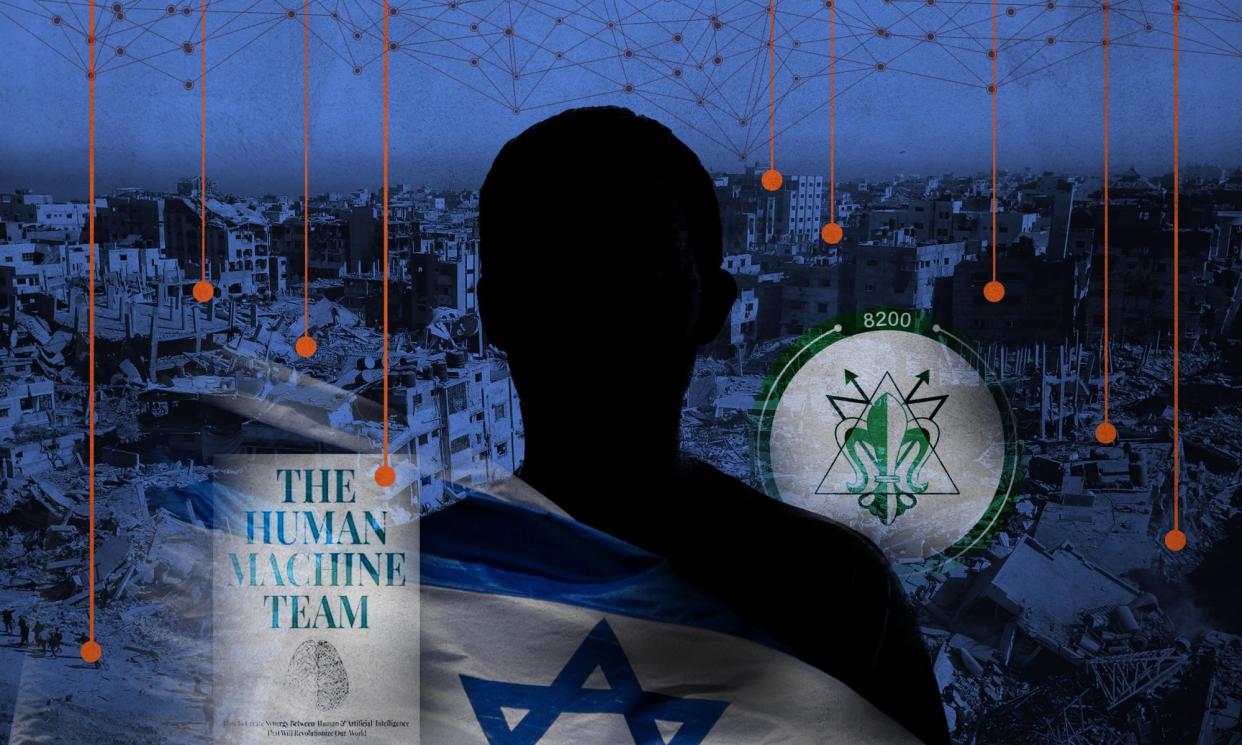

The identity of the commander of Israel’s Unit 8200 is a closely guarded secret. He occupies one of the most sensitive roles in the military, leading one of the world’s most powerful surveillance agencies, comparable to the US National Security Agency.

Yet after spending more than two decades operating in the shadows, the Guardian can reveal how the controversial spy chief – whose name is Yossi Sariel – has left his identity exposed online.

The embarrassing security lapse is linked to a book he published on Amazon, which left a digital trail to a private Google account created in his name, along with his unique ID and links to the account’s maps and calendar profiles.

The Guardian has confirmed with multiple sources that Sariel is the secret author of The Human Machine Team, a book in which he offers a radical vision for how artificial intelligence can transform the relationship between military personnel and machines.

Published in 2021 using a pen name composed of his initials, Brigadier General YS, it provides a blueprint for the advanced AI-powered systems that the Israel Defense Forces (IDF) have been pioneering during the six-month war in Gaza.

An electronic version of the book included an anonymous email address that can easily be traced to Sariel’s name and Google account. Contacted by the Guardian, an IDF spokesperson said the email address was not Sariel’s personal one, but “dedicated specifically for issues to do with the book itself”.

The security blunder is likely to place further pressure on Sariel, who is said to “live and breathe” intelligence but whose tenure running the IDF’s elite cyber intelligence division has become mired in controversy.

Unit 8200, once revered within Israel and beyond for intelligence capabilities that rivalled those of the UK’s GCHQ, is thought to have built a vast surveillance apparatus to closely monitor the Palestinian territories.

However, it has been criticised over its failure to foresee and prevent Hamas’s deadly 7 October assault last year on southern Israel, in which Palestinian militants killed nearly 1,200 Israelis and kidnapped about 240 people.

Since the Hamas-led attacks, there have been accusations that Unit 8200’s “technological hubris” came at the expense of more conventional intelligence-gathering techniques.

In its war in Gaza, the IDF appears to have fully embraced Sariel’s vision of the future, in which military technology represents a new frontier where AI is being used to fulfil increasingly complex tasks on the battlefield.

In the book, published three years ago, Sariel argued that his ideas about using machine learning to transform modern warfare should become mainstream. “We just need to take them from the periphery and deliver them to the centre of the stage,” he wrote.

One section of the book heralds the concept of an AI-powered “targets machine”, descriptions of which closely resemble the target recommendation systems the IDF is now known to have been relying upon in its bombardment of Gaza.

Over the last six months, the IDF has deployed multiple AI-powered decision support systems that have been rapidly developed and refined by Unit 8200 under Sariel’s leadership.

They include the Gospel and Lavender, two target recommendation systems that have been revealed in reports by the Israeli-Palestinian publication +972 magazine, its Hebrew-language outlet Local Call and the Guardian.

The IDF says its AI systems are intended to assist human intelligence officers, who are required to verify that military suspects are legitimate targets under international law. A spokesperson said the military used “various types of tools and methods”, adding: “Evidently, there are tools that exist in order to benefit intelligence researchers that are based on artificial intelligence.”

Targets machine

On Wednesday, +972 and Local Call placed the spotlight on the link between Unit 8200 and the book authored by a mysteriously named Brigadier General YS.

Sariel is understood to have written the book with the IDF’s permission after a year as a visiting researcher at the US National Defense University in Washington DC, where he made the case for using AI to transform modern warfare.

Aimed at high-ranking military commanders and security officials, the book articulates a “human-machine teaming” concept that seeks to achieve synergy between humans and AI, rather than constructing fully autonomous systems.

It reflects Sariel’s ambition to become a “thought leader”, according to one former intelligence official. In the 2000s, he was a leading member of a group of academically minded spies known as “the Choir”, which agitated for an overhaul of Israeli intelligence practices.

An Israeli press report suggests that by 2017 he was head of intelligence for the IDF’s central command. His subsequent elevation to commander of Unit 8200 amounted to an endorsement by the military establishment of his technological vision for the future.

Sariel refers in the book to “a revolution” in recent years within the IDF, which has “developed a new concept of intelligence centric warfare to connect intelligence to the fighters in the field”. He advocates going further still, fully merging intelligence and warfare, in particular when conducting lethal targeting operations.

In one chapter of the book, he provides a template for how to construct an effective targets machine drawing on “big data” that a human brain could not process. “The machine needs enough data regarding the battlefield, the population, visual information, cellular data, social media connections, pictures, cellphone contacts,” he writes. “The more data and the more varied it is, the better.”

Such a targets machine, he writes, would draw on complex models that make predictions built “on lots of small, diverse features”, listing examples such as “people who are with a Hezbollah member in a WhatsApp group, people who get new cellphones every few months, those who change their addresses frequently”.

He argues that using AI to create potential military targets can be more efficient and avoid “bottlenecks” created by intelligence officials or soldiers. “There is a human bottleneck for both locating the new targets and decision-making to approve the targets. There is also the bottleneck of how to process a great amount of data. Then there is the bottleneck of connecting the intelligence to the fire.” He adds: “A team consisting of machines and investigators can blast the bottleneck wide open.”

Intelligence divide

Disclosure of Sariel’s security lapse comes at a difficult time for the intelligence boss. In February, he came under public scrutiny in Israel when the Israeli newspaper Maariv published an account of recriminations within Unit 8200 after the 7 October attacks.

Sariel was not named in the article, which referred to Unit 8200’s commander only as “Y”. However, the rare public criticism brought into focus a divide within Israel’s intelligence community over its biggest failure in a generation.

Sariel’s critics, the report said, believe Unit 8200’s prioritisation of “addictive and exciting” technology over more old-fashioned intelligence methods had led to the disaster. One veteran official told the newspaper the unit under Sariel had “followed the new intelligence bubble”.

For his part, Sariel is quoted as telling colleagues that 7 October will “haunt him” until his last day. “I accept responsibility for what happened in the most profound sense of the word,” he said. “We were defeated. I was defeated.”
