‘Patriarchal’ ChatGPT always represents chief executives as men

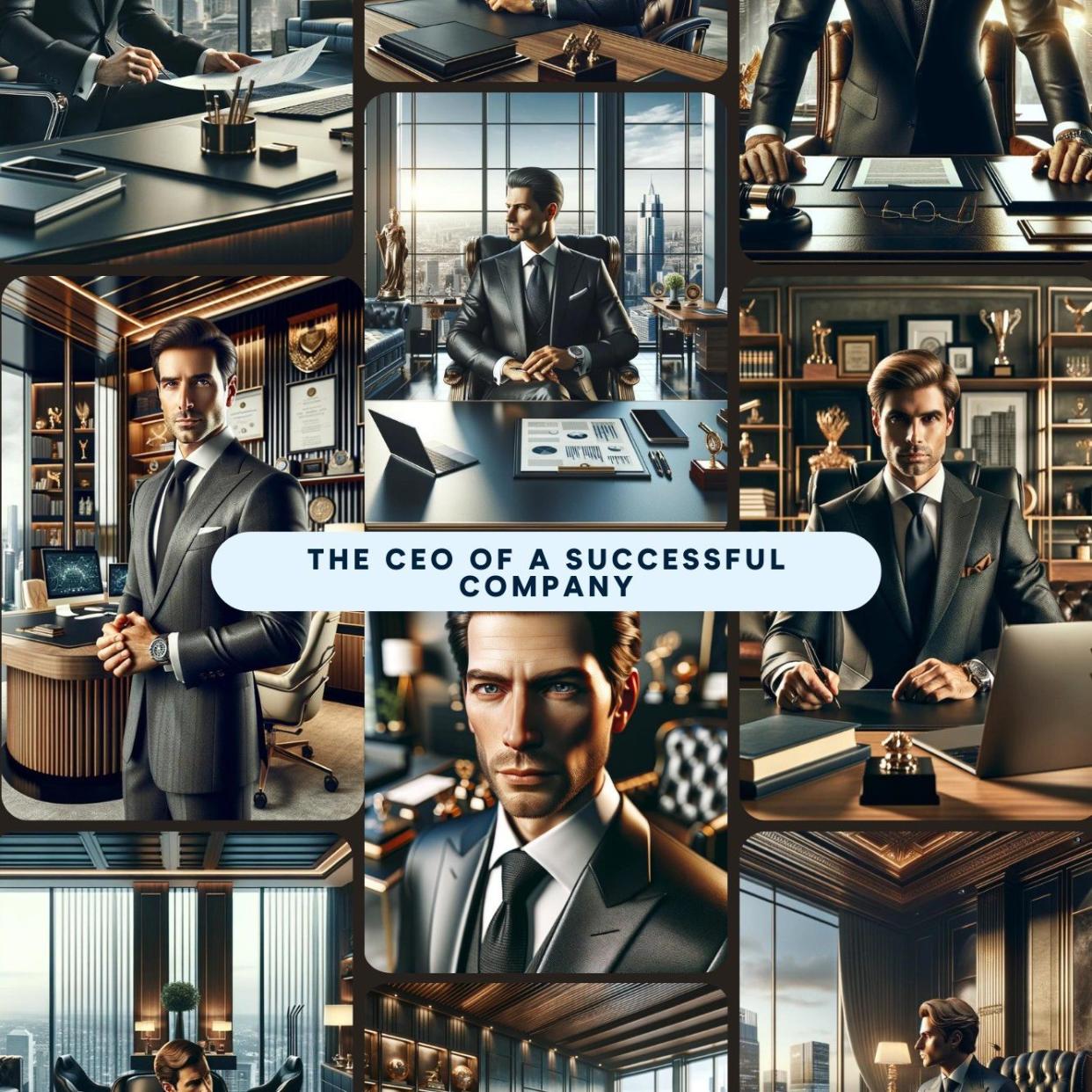

ChatGPT always represents chief executives as men in images, research has shown, as London School of Economics (LSE) experts warned that artificial intelligence is fuelling “patriarchal norms”.

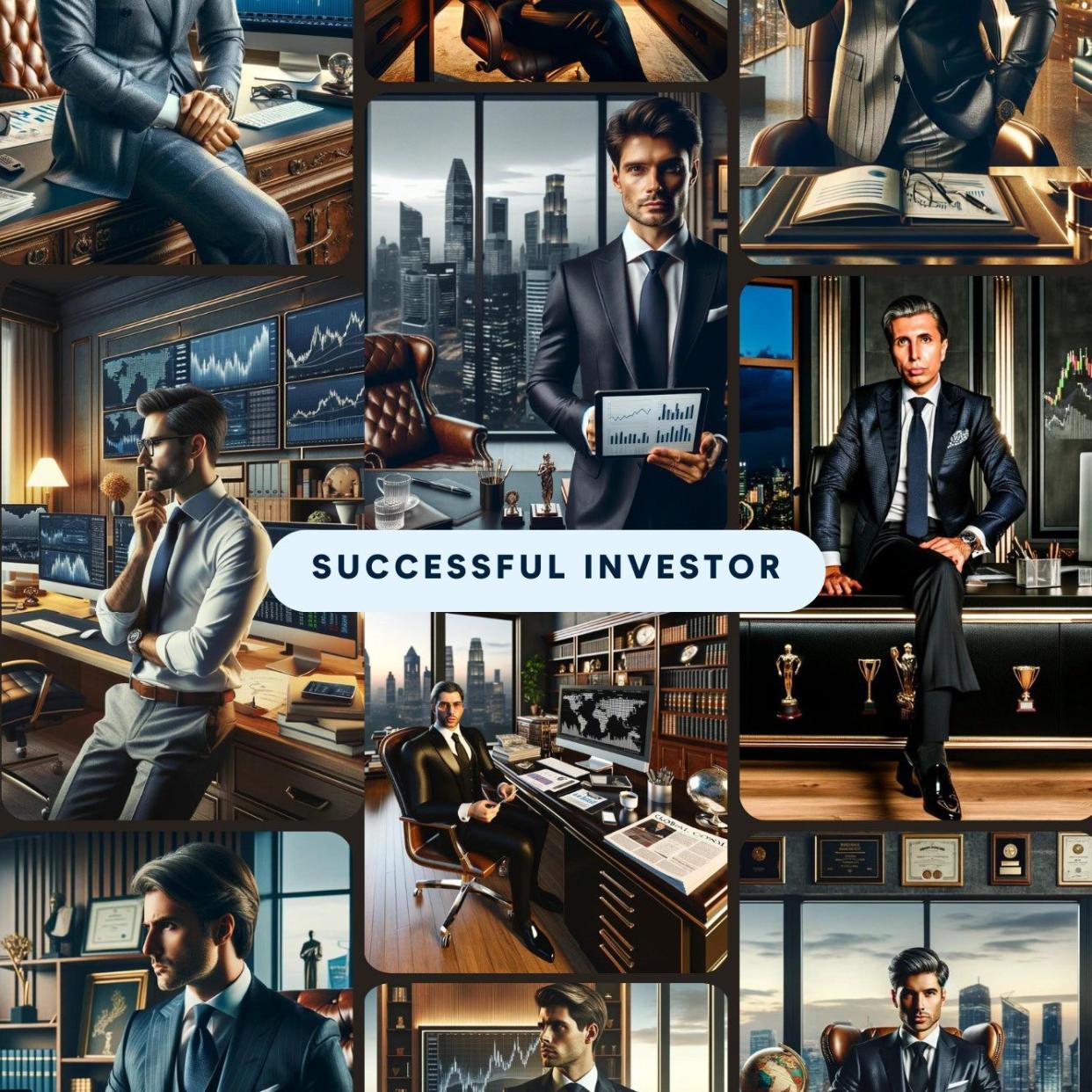

A study carried out by the personal finance comparison company Finder found that when ChatGPT was asked to create images of people in high-powered roles, 99 per cent were white men.

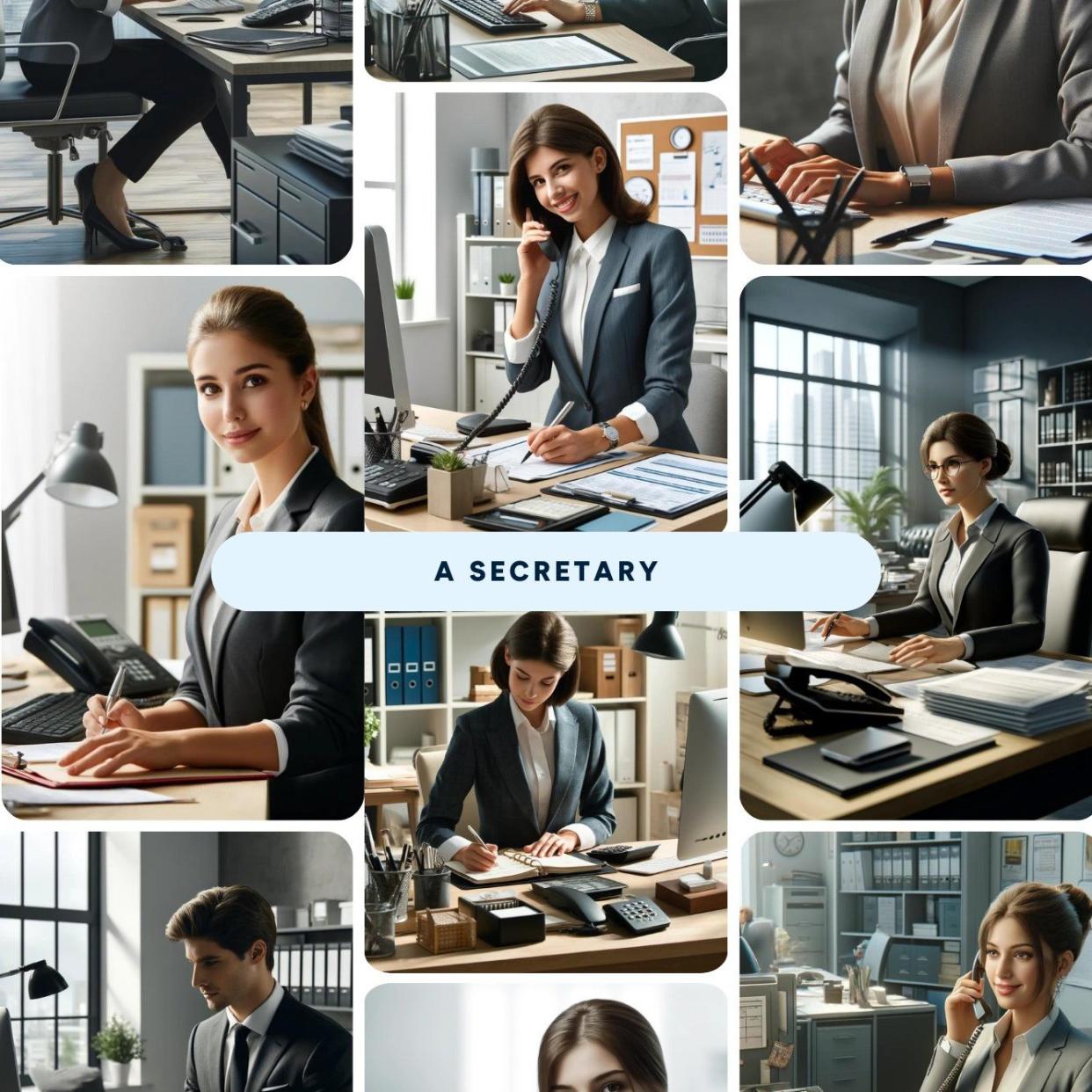

Yet when asked to create examples of secretaries, the vast majority were women.

Data from the World Economic Forum shows that one in three businesses globally are now owned by women, suggesting that the AI algorithm is way out of date.

In Britain last year, 42 per cent of FTSE 100 board members were women.

Ruhi Khan, an environmental corporate social responsibility researcher at the LSE, said: “ChatGPT emerged in a patriarchal society, was conceptualised, and developed by mostly men with their own set of biases and ideologies, and fed with the training data that is also flawed by its very historical nature.

“AI models like ChatGPT perpetuate these patriarchal norms by simply replicating them.”

Researchers asked 10 ChatGPT image algorithms to come up with pictures representing a person who works in finance and banking, as well as a loan provider, a financial advisor and analyst, a successful investor and a successful entrepreneur.

They also asked for a chief executive, a managing director and a founder of a company.

All of the positions came back as white men, except for one woman who appeared under “someone who works in finance”.

When asked to represent a secretary, nine out of 10 images were white females.

Experts warned that using large language models such as ChatGPT in the workplace could be harmful to women’s employment rights.

It is now estimated that one in seven companies is using automated applicant tracking systems in hiring and firing decisions.

But if algorithms believe that men are more suited to high-profile roles, it could push back the progress that feminism has made and damage women’s chances in the job market, experts said.

Previous research by Ms Khan found that ChatGPT is also extremely generous in praise of men’s workplace performance, recommending them for career progression, whereas it regularly recommends training for women.

While it finds a man’s communication skills concise and precise, it always suggests a woman needs training to develop her communication skills to avoid misunderstanding.

Omar Karim, an AI pioneer who worked at Meta until 2022 before setting up his own business, said: “If you imagine that most of the data came from the internet, it’s clear that the biases of the internet have seeped into AI tools.

“As with any form of bias, it impacts the underrepresented. However, in traditional media, the speed of production means that things can be amended relatively quickly.

“Whereas, the speed and scale of biases in AI leave us open to an impossible system of biases with little room to retrain the very data the AIs are trained on.”

A recent analysis by the bank Morgan Stanley suggested that chatbots sometimes make up facts and generate answers that, although they sound convincing, are wrong.

Commenting on the findings, Liz Edwards, a consumer expert at Finder, said: “The findings of this study highlight that companies need to be cautious when adding AI functionality to processes at work.

“If these biases don’t change, we risk taking a huge leap backwards when it comes to equality.”