Artists may make AI firms pay a high price for their software’s ‘creativity’

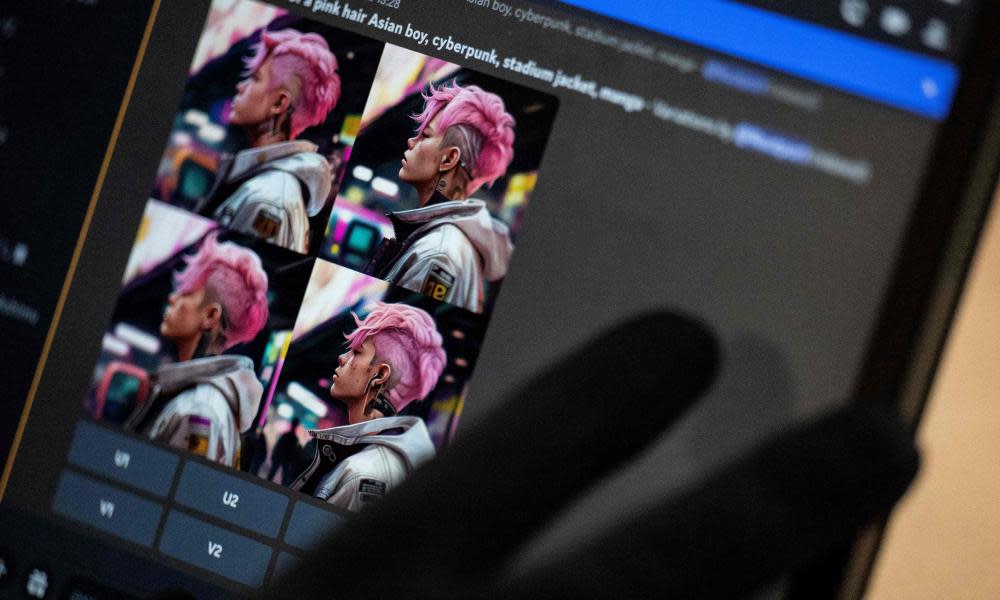

Those whom the gods wish to destroy they first give access to Midjourney, a text-to-graphics “generative AI” that is all the rage. It’s engagingly simple to use: type in a text prompt describing the kind of image you’d like it to generate, and up comes a set of images that you could never have produced yourself. Type, for example, “An image of cat looking at it and ‘on top of the world’, in the style of cyberpunk futurism, bright red background, light cyan, edgy street art, bold, colourful portraits, use of screen tones, dark proportions, modular” and it will happily oblige with endless facility.

Welcome to a good way to waste most of a working day. Many people think it’s magical, which in a sense it is, at least as the magician Robert Neale portrayed it: a unique art form in which the magician creates elaborate mysteries during a performance, leaving the spectator baffled about how it was done. But if the spectator somehow manages to discover how the trick was done, then the magic disappears.

So let us examine how Midjourney and its peers do their tricks. The secret lies mainly in the fact that they are trained by ingesting the LAION-5B dataset – a collection of links to nearly 6bn tagged images, compiled by scraping the web indiscriminately and thought to include a significant number of pointers to copyrighted artworks. When fed a text prompt, the AIs then assemble a set of composite images that might resemble what the user asked for. Voilà!

What this implies is that if you are a graphic artist whose work has been published online, there is a good chance that Midjourney and co have those works in their capacious memory somewhere. And no tech company asked your permission to “scrape” them into the maw of its machine. Nor did it offer to compensate you for doing so. Which means that underpinning the magic these generative AIs so artfully perform may be intellectual property (IP) theft on a significant scale.


Of course the bosses of AI companies know this, and even as I write their lawyers will be preparing briefs on whether appropriation-by-scraping is legitimate under the “fair use” doctrines of copyright law in different jurisdictions, and so on. They’re doing this because ultimately these questions are going to be decided by courts. And the lawsuits are already under way. In one, a group of graphic artists has sued three companies for allegedly using the artists’ original works to train the companies’ AIs in their styles, thereby enabling users to generate works that may not be sufficiently transformative of the protected originals – and in the process generating unauthorised derivative works.

Just to put that in context: if an AI company were aware that its training data included unlicensed works, or that its algorithms generated unauthorised derivative works not covered by “fair use”, then under US law it could be liable for statutory damages of up to $150,000 for each work knowingly infringed. And in case anyone thinks that infringement suits by angry artists are like midge bites to corporations, it’s worth noting that Getty, a very large picture library, is suing Stability AI for allegedly copying millions of its photos without a licence and using them to train its AI, Stable Diffusion, to generate more accurate depictions based on user prompts. The inescapable implication is that there may be serious liabilities for generative AIs coming down the line.

Now, legal redress is all very well, but it’s usually beyond the resources of working artists. And lawsuits are almost always retrospective, coming after the damage has been done. It’s sometimes better, as in rugby, to “get your retaliation in first”. Which is why the most interesting news of the week was that a team of researchers at the University of Chicago have developed a tool to enable artists to fight back against permissionless appropriation of their work by corporations. Appropriately, it’s called Nightshade and it “lets artists add invisible changes to the pixels in their art before they upload it online so that if it’s scraped into an AI training set, it can cause the resulting model to break in chaotic and unpredictable ways” – dogs become cats, cars become cows, and who knows what else? (Boris Johnson becoming a piglet, with added grease perhaps?) It’s a new kind of magic. And the good news is that corporations might find it black. Or even deadly.

What I’ve been reading

Personal organiser

Can a software package edit our thoughts? Lovely New Yorker essay by Ian Parker.

Front and centre

Joe Biden: “American leadership is what holds the world together.” Oh yeah? says Adam Tooze on his Substack blog.

Port in a storm

American Chris Arnade’s grudging tribute to the Brits he doesn’t really understand in dysfunctional Dover.